Text processing in NLP (Natural Language Processing) refers to the manipulation, analysis, and transformation of textual data to extract useful information and derive insights. It involves various techniques and methodologies to preprocess and transform raw text into a format suitable for NLP tasks such as text classification, sentiment analysis, named entity recognition, machine translation, and more. Text processing can be done using:

1)Regular Expressions

Basic text processing requires the identification of sentence and word boundaries (tokenization).Often it is beneficial to reduce the number of different word forms and token (lemmatization / stemming). To achieve that regular expressions can be used.

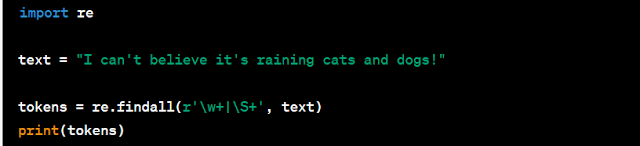

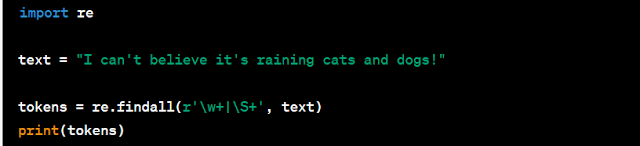

Tokenization: Regular expressions can be utilized to define custom tokenization rules based on specific patterns in the text.

Output:

Lemmatization and Stemming: Regular expressions can be useful for pattern matching during lemmatization or stemming.

Output:

It involves transforming raw text into a standardized and consistent format to reduce the variations in textual data. By doing so, improve the accuracy of subsequent NLP tasks. Here are some common techniques used for text normalization in NLP.

Converting to Lowercase:

Output:

Removing Punctuation:

Handling Contractions:

Removing Stop Words:

Output:

Lemmatization and Stemming: Examples are given above.

3)Minimal Editing Distance(med)

It is a way to measure the similarity of two strings. It is defined as the smallest number of (editing) operations (substitution, deletion, insertion) needed to convert one string into the other. For example

String A : Intention

Sting B : Execution

med : 5 (delete i, substitute e for n, substitute x for t, insert c, substitute u for n)

Med algorithm in python:

4) N-gram Language Models:

An N-gram language model is a statistical model used in NLP to predict the probability of a sequence of words based on the occurrence of smaller word sequences called N-grams. An N-gram is a contiguous sequence of N words. N-gram language models are built on the assumption that the probability of a word depends on the previous N-1 words in the sequence. By analyzing large amounts of text data, these models learn the likelihood of specific N-grams occurring and use that knowledge to generate or predict text.

For Example:

In this example, a trigram (N=3) language model has been built using the given corpus. Then the model is used to generate text by providing a prefix (["i", "love"]) and sampling the next word based on the learned probabilities.

5) Smoothing:

It is used in NLP to address the problem of zero probabilities or sparse data in language. It helps to handle unseen or infrequent N-grams by redistributing probabilities from observed N-grams to unseen ones. Smoothing is particularly useful when estimating probabilities from limited training data.

Add-k Smoothing (Laplace Smoothing): Add-k smoothing adds a small constant (k) to the count of each N-gram in the training data. This ensures that no N-gram has a zero probability and reduces the impact of unseen N-grams. The value of k is usually set to 1, but it can be adjusted based on the data and the level of smoothing desired.

Interpolation Smoothing:

Interpolation combines probabilities from different N-gram orders to smooth the language model. It assigns weights to the probabilities of different N-gram orders and combines them to calculate the final probability. This technique is commonly used in backoff models, where the probabilities of higher-order N-grams are used when available, and lower-order N-grams are used as fallbacks.

Kneser-Ney Smoothing: Kneser-Ney smoothing is a widely used technique that assigns probabilities to N-grams based on their continuation probability. It estimates the probability of an N-gram based on the number of unique preceding (N-1)-grams it has followed in the training data. Kneser-Ney smoothing is particularly effective in capturing the context and has been shown to improve the performance of language models.

Huge language models and stupid backoff: It refers to a combination of two techniques used in text processing and natural language processing (NLP): the use of large language models and the application of a simple smoothing technique called "stupid backoff.

Comments

Post a Comment